Status: Draft

Convolutional Neural Networks

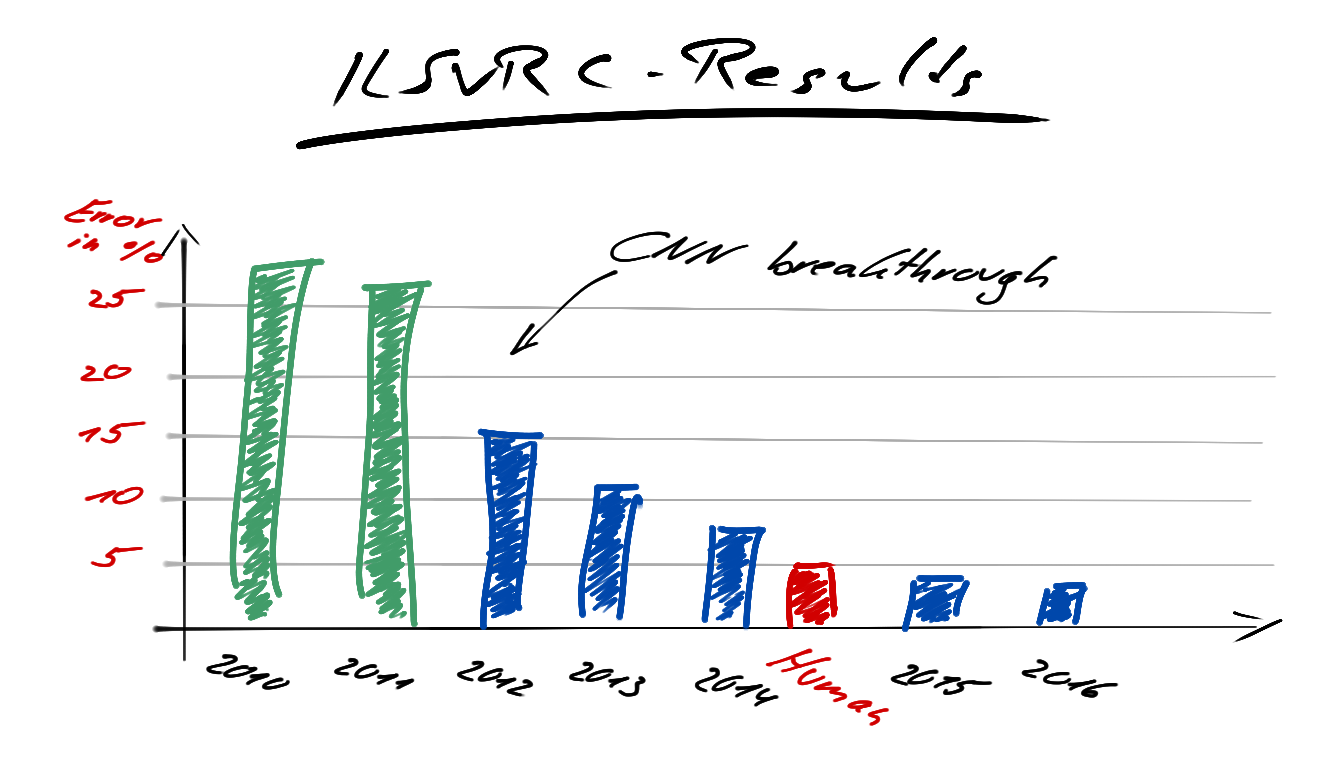

This course is all about convolutional neural networks (CNNs). CNNs have been the state-of-the-art technique for image classification since 2012, when a CNN won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), reducing the top-5 error from 26% to 15% [IMA12][ZIT17]. In this course, you will first experiment with image classification using well-known algorithms from the course Introduction to Machine Learning (highly recommended). Step by step, you will replace these with a simple fully connected feedforward network and start building your own neural network framework. Finally, you will learn about 2D convolution for neural networks and extend your framework with it.

Prerequisites

- Introduction to Machine Learning (highly recommended)

- Introduction to Neural Networks (recommended)

Notebooks

Before we start with neural networks for image classification, let us first try to solve the problem with tools we are already familiar with. In this exercise, you will classify images of handwritten digits with logistic regression or, more precisely, since there are more than two classes, softmax regression. You will also learn about stochastic gradient descent (as opposed to full-batch gradient descent) and evaluate your model using accuracy and the F1 score.
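To make these ideas concrete, here is a minimal pure-Python sketch of softmax regression trained with SGD, together with accuracy and a macro-averaged F1 score. It is an illustration under stated assumptions, not the notebook's solution: all function names are hypothetical, the loss is assumed to be cross-entropy, and a tiny one-hot toy dataset stands in for the handwritten digits.

```python
import math
import random

def softmax(z):
    # Subtract the max before exponentiating for numerical stability
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def predict_proba(W, b, x):
    # One linear score z_k = w_k . x + b_k per class, turned into probabilities
    z = [sum(wi * xi for wi, xi in zip(w_k, x)) + b_k for w_k, b_k in zip(W, b)]
    return softmax(z)

def sgd_softmax_regression(X, y, n_classes, lr=0.1, epochs=50, seed=0):
    # Hypothetical helper: cross-entropy loss, one parameter update per sample
    rng = random.Random(seed)
    n_features = len(X[0])
    W = [[0.0] * n_features for _ in range(n_classes)]
    b = [0.0] * n_classes
    indices = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(indices)  # "stochastic": visit the samples in random order
        for i in indices:
            p = predict_proba(W, b, X[i])
            for k in range(n_classes):
                # Gradient of cross-entropy w.r.t. z_k is p_k - [k == y_i]
                g = p[k] - (1.0 if k == y[i] else 0.0)
                for j in range(n_features):
                    W[k][j] -= lr * g * X[i][j]
                b[k] -= lr * g
    return W, b

def predict(W, b, x):
    p = predict_proba(W, b, x)
    return max(range(len(p)), key=p.__getitem__)

def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def macro_f1(y_true, y_pred, n_classes):
    # Per-class F1 = 2TP / (2TP + FP + FN), which equals 2PR / (P + R); then averaged
    scores = []
    for k in range(n_classes):
        tp = sum(t == k and p == k for t, p in zip(y_true, y_pred))
        fp = sum(t != k and p == k for t, p in zip(y_true, y_pred))
        fn = sum(t == k and p != k for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / n_classes
```

With `X = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]` and `y = [0, 1, 2]`, training and then predicting each sample recovers the labels. Updating after every single sample is what makes the descent stochastic; full-batch gradient descent would average the gradient over all samples before each step.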

In the course Introduction to Machine Learning, you calculated the gradient by hand and used only the final formula. In this exercise, you will learn to differentiate just the individual elementary functions and chain their derivatives together programmatically. This makes it possible to build computational graphs programmatically and differentiate them with respect to chosen variables, knowing only the derivatives of the most basic functions.
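The idea of chaining elementary derivatives can be sketched as a tiny reverse-mode automatic differentiation engine for scalars. This is an illustration under assumptions, not the framework used in the course: the `Node` class and the `exp`, `log` and `backward` helpers are hypothetical, and only addition, multiplication, `exp` and `log` are supported. Each operation stores only its local derivative; `backward` combines them with the chain rule.

```python
import math

class Node:
    """A scalar in a computational graph that remembers how it was computed."""
    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs (parent_node, local derivative d self / d parent)
        self.grad = 0.0

    def __add__(self, other):
        other = other if isinstance(other, Node) else Node(other)
        return Node(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        other = other if isinstance(other, Node) else Node(other)
        return Node(self.value * other.value, ((self, other.value), (other, self.value)))

def exp(x):
    v = math.exp(x.value)
    return Node(v, ((x, v),))  # d/dx exp(x) = exp(x)

def log(x):
    return Node(math.log(x.value), ((x, 1.0 / x.value),))  # d/dx log(x) = 1/x

def backward(output):
    # Topologically sort the graph, then apply the chain rule from the output backwards
    order, seen = [], set()

    def visit(node):
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0
    for node in reversed(order):
        for parent, local in node.parents:
            # "+=" accumulates contributions when a node feeds several operations
            parent.grad += local * node.grad
```

For example, building `f = log(exp(w * x) + 1.0)` from `x = Node(2.0)` and `w = Node(0.5)` and calling `backward(f)` leaves x·σ(wx) in `w.grad` and w·σ(wx) in `x.grad`, where σ is the logistic sigmoid, although the derivative of `f` as a whole was never written down.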

In this exercise, you will learn to visualize a neural network given its weight matrices and to compute the forward pass using matrix and vector operations.
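A minimal sketch of the forward pass, assuming that the weight matrix of layer l has one row per neuron in layer l and one column per neuron in layer l-1, and assuming a sigmoid activation in every layer (the course notebook may use other activations). The helper names are hypothetical; with NumPy, `matvec` would simply be `W @ x`.

```python
import math

def matvec(W, x):
    # (W x)_i = sum_j W[i][j] * x[j]
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(weights, biases, x):
    # a^(0) = x,  a^(l) = sigmoid(W^(l) a^(l-1) + b^(l))
    a = x
    for W, b in zip(weights, biases):
        z = [zi + bi for zi, bi in zip(matvec(W, a), b)]
        a = [sigmoid(zi) for zi in z]
    return a
```

With `weights = [[[1, 0], [0, 1]], [[1, -1]]]`, zero biases and the input `[0, 0]`, both hidden neurons output 0.5 and the single output neuron also outputs 0.5.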

So far, you have classified images using softmax regression and learned the basics of the forward and backward pass. Next, you will study an implementation of a simple fully connected neural network for image classification.

To deepen your understanding of neural networks, you will start implementing your own framework. The given notebook explains some core functions and concepts of the framework so that everyone has the same starting point. Our previous exercises were self-contained and not very modular; you are going to change that. Let us begin with a fully connected network on the now well-known MNIST dataset.
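The kind of modularity meant here can be sketched with layers that share a common `forward`/`backward` interface and cache what they need during the forward pass. The class names `Layer`, `Dense`, `Sigmoid` and `Sequential` are hypothetical and do not describe the notebook's actual API; the weight initialization (Gaussian with standard deviation 1/sqrt(n_in)) is likewise an assumption.

```python
import math
import random

class Layer:
    """Hypothetical base class: map inputs forward and gradients backward."""
    def forward(self, x):
        raise NotImplementedError

    def backward(self, grad_out):
        raise NotImplementedError

class Dense(Layer):
    def __init__(self, n_in, n_out, seed=0):
        rng = random.Random(seed)
        self.W = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
                  for _ in range(n_out)]
        self.b = [0.0] * n_out

    def forward(self, x):
        self.x = x  # cache the input; backward needs it for dL/dW
        return [sum(w * xi for w, xi in zip(row, x)) + bi
                for row, bi in zip(self.W, self.b)]

    def backward(self, grad_out):
        # dL/dW[i][j] = grad_out[i] * x[j],  dL/db = grad_out,  dL/dx = W^T grad_out
        self.dW = [[g * xj for xj in self.x] for g in grad_out]
        self.db = list(grad_out)
        return [sum(self.W[i][j] * grad_out[i] for i in range(len(grad_out)))
                for j in range(len(self.x))]

class Sigmoid(Layer):
    def forward(self, x):
        self.out = [1.0 / (1.0 + math.exp(-v)) for v in x]
        return self.out

    def backward(self, grad_out):
        # sigmoid'(z) = s * (1 - s), reusing the cached output s
        return [g * s * (1.0 - s) for g, s in zip(grad_out, self.out)]

class Sequential:
    def __init__(self, layers):
        self.layers = layers

    def forward(self, x):
        for layer in self.layers:
            x = layer.forward(x)
        return x

    def backward(self, grad):
        for layer in reversed(self.layers):
            grad = layer.backward(grad)
        return grad
```

Because every layer only knows its own local gradients, `Sequential.backward` can push any loss gradient through an arbitrary stack of layers. A finite-difference check (perturb one weight slightly and recompute the loss) is a cheap way to confirm that a new layer's `backward` is correct.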

Before starting with convolution in neural networks, do this exercise to understand 2D convolution and image filters, also known as kernels.
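A direct, loop-based sketch of 2D convolution helps before moving to a vectorized version. It assumes a single-channel image, stride 1 and no padding ("valid" mode) and, like most deep-learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation. The function name is hypothetical.

```python
def conv2d_valid(image, kernel):
    # Slide the kernel over every position where it fits completely ("valid" mode).
    # The kernel is not flipped, i.e. this is cross-correlation as in most NN libraries.
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the kh x kw patch whose top-left corner is (i, j)
            out[i][j] = sum(
                image[i + m][j + n] * kernel[m][n]
                for m in range(kh)
                for n in range(kw)
            )
    return out
```

Applied to a 3 by 5 image whose rows are all `[0, 0, 0, 1, 1]`, the Prewitt-style kernel `[[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]` yields `[[0, 3, 3]]`: zero in the flat region and a strong response wherever the window overlaps the vertical edge.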

The following pen & paper exercise provides the theoretical background for the vectorization of the convolutional layer.
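One common way to vectorize convolution is im2col: copy every receptive field into one row of a matrix, so that the whole layer becomes a single matrix product. The sketch below is a pure-Python illustration under assumptions (single input channel, stride 1, no padding, hypothetical function names) and may differ in detail from the derivation in the pen & paper exercise. With NumPy, the nested `sum` would become one `@`.

```python
def im2col(image, kh, kw):
    # Each row holds one flattened kh x kw patch; rows follow output positions row by row
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    cols = []
    for i in range(out_h):
        for j in range(out_w):
            cols.append([image[i + m][j + n] for m in range(kh) for n in range(kw)])
    return cols, out_h, out_w

def conv2d_im2col(image, kernels):
    # All kernels are assumed to share the same size kh x kw
    kh, kw = len(kernels[0]), len(kernels[0][0])
    cols, out_h, out_w = im2col(image, kh, kw)
    # Flatten each kernel the same way as the patches: shape (n_filters, kh*kw)
    flat = [[k[m][n] for m in range(kh) for n in range(kw)] for k in kernels]
    # (out_h*out_w, kh*kw) times (kh*kw, n_filters) -> (out_h*out_w, n_filters)
    prod = [[sum(a * b for a, b in zip(row, f)) for f in flat] for row in cols]
    # Reshape into one out_h x out_w feature map per kernel
    return [[[prod[i * out_w + j][c] for j in range(out_w)] for i in range(out_h)]
            for c in range(len(kernels))]
```

The price is memory, since each input pixel is copied into up to kh·kw rows. In exchange, all the work moves into one large matrix multiplication, which optimized linear algebra libraries execute much faster than explicit Python loops.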

In this exercise, you will continue implementing the neural network framework that you started in exercise e06nnframework. By the end of this exercise, the framework should include a convolutional layer and a pooling layer, so that you can build simple ConvNets. Your operations, especially the convolution, need to be efficient so that they do not slow down training to an unacceptable degree. Therefore, your goal is to implement vectorized versions of these layers.
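For the pooling layer, the behaviour a vectorized implementation has to reproduce is shown in this loop-based sketch of max pooling. It assumes a single channel, non-overlapping windows (stride equal to the window size) and input sides divisible by the window size; the function names are hypothetical. The forward pass remembers where each maximum came from so that the backward pass can route the gradient there.

```python
def maxpool_forward(x, size=2):
    # Non-overlapping size x size windows; assumes both sides are divisible by size
    out, argmax = [], []
    for i in range(0, len(x), size):
        row_out, row_arg = [], []
        for j in range(0, len(x[0]), size):
            window = [(x[i + m][j + n], (i + m, j + n))
                      for m in range(size) for n in range(size)]
            # max() returns the first maximum if there are ties
            value, pos = max(window, key=lambda t: t[0])
            row_out.append(value)
            row_arg.append(pos)
        out.append(row_out)
        argmax.append(row_arg)
    return out, argmax

def maxpool_backward(grad_out, argmax, in_h, in_w):
    # Only the maximum of each window influenced the output, so only it gets gradient
    grad_in = [[0.0] * in_w for _ in range(in_h)]
    for i, row in enumerate(argmax):
        for j, (r, c) in enumerate(row):
            grad_in[r][c] += grad_out[i][j]
    return grad_in
```

For the input `[[1, 3, 2, 0], [4, 2, 1, 5], [0, 1, 7, 2], [3, 2, 1, 6]]`, the forward pass returns `[[4, 5], [3, 7]]`, and every input position that was not a window maximum receives a zero gradient.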

After finishing the exercise, you should have a working and efficient neural network framework. Do not forget to move all classes into the corresponding script files of the framework. A good follow-up is to repeat your experiments from exercise-nn-framework on a dataset of your interest, this time with a ConvNet instead of a standard fully connected network.

One can never have enough training data. Moreover, in real-world problems, having experts label the data can become very expensive. Data augmentation addresses both problems by generating additional labeled training images from existing ones. The following exercise teaches you the most basic concepts of data augmentation for images.
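Two of the most basic image augmentations, mirroring and translation, are sketched below for grayscale images stored as nested lists. The helper names, the zero fill value and the default maximum shift of 2 pixels are assumptions for illustration. Whether a transformation preserves the label depends on the data: a mirrored handwritten digit may no longer be a valid digit, which is why flipping is disabled by default here.

```python
import random

def hflip(image):
    # Mirror each row left to right
    return [row[::-1] for row in image]

def shift(image, dy, dx, fill=0):
    # Translate by (dy, dx) pixels; positions moved in from outside the image get `fill`
    h, w = len(image), len(image[0])
    out = [[fill] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            si, sj = i - dy, j - dx
            if 0 <= si < h and 0 <= sj < w:
                out[i][j] = image[si][sj]
    return out

def augment(image, rng, max_shift=2, flip=False):
    # Produce one randomly perturbed copy; the label of the image stays unchanged
    dy = rng.randint(-max_shift, max_shift)
    dx = rng.randint(-max_shift, max_shift)
    out = shift(image, dy, dx)
    if flip and rng.random() < 0.5:
        out = hflip(out)
    return out
```

Calling `augment(image, random.Random(42))` repeatedly yields many slightly different training images that all keep the original label.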

References (ISO 690)

| Key | Reference |
|---|---|
| [IMA12] | *Official website of the ImageNet Large Scale Visual Recognition Challenge* [online]. [viewed 2019-01-10]. Available from: http://image-net.org/challenges/LSVRC/2012/results.html |
| [ZIT17] | VON ZITZEWITZ, Gustav. *Survey of neural networks in autonomous driving*. 2017. |